More AI is not possible 🙅🏽‍♂️ without Core AI

Everyone’s focused on AI models. But the real leverage is in what powers them.

In a market fixated on models and apps—and headlines about GPUs and shortages—it’s easy to miss where production AI is decided: infrastructure. Chips matter, but they are only one component. The foundational force that powers the entire AI economy lives deeper in the stack.

The decisive layer is the operational substrate—servers, compute fabrics, interconnects, orchestration, and data pipelines—where reliability, governance, and unit economics are made real. When infrastructure becomes invisible, reliable, and scalable, enterprises build on it. That is where durable advantage accrues.

More AI is not possible without Core AI (a.k.a. AI Infra)

This brief reframes the AI-infrastructure conversation from feeds and speeds to business outcomes and platform leverage. It outlines the stack, draws out the GTM implications, and offers guiding principles for making AI Factory solutions strategically essential.

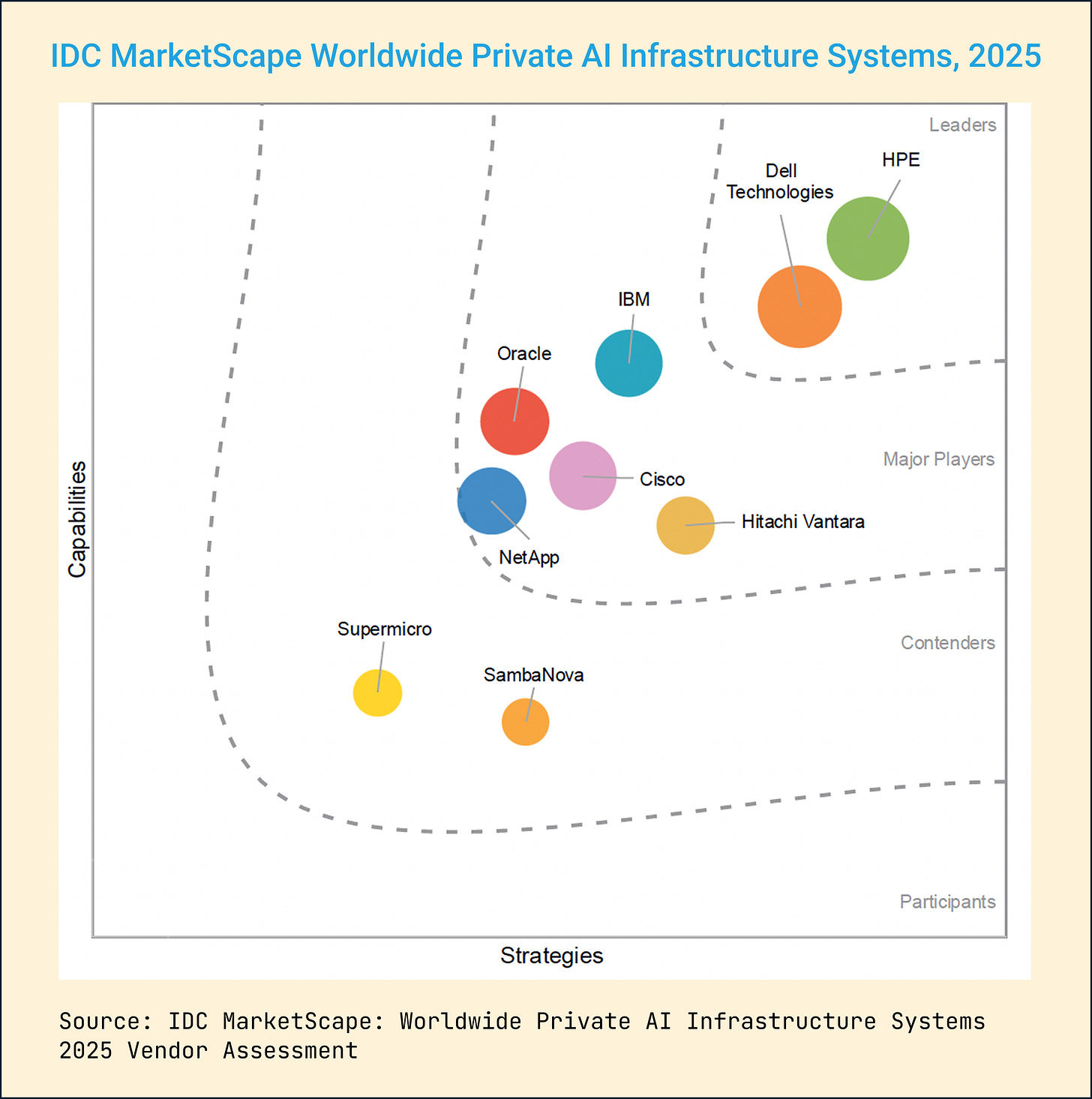

The focus here is on vendors building the physical and hybrid backbone of enterprise AI—from servers and fabrics to orchestration and on-prem deployment platforms.

Here’s a high-level perspective for AI-infrastructure providers rethinking their narrative and GTM.

The Stack Everyone Sees (and the One They Don’t)

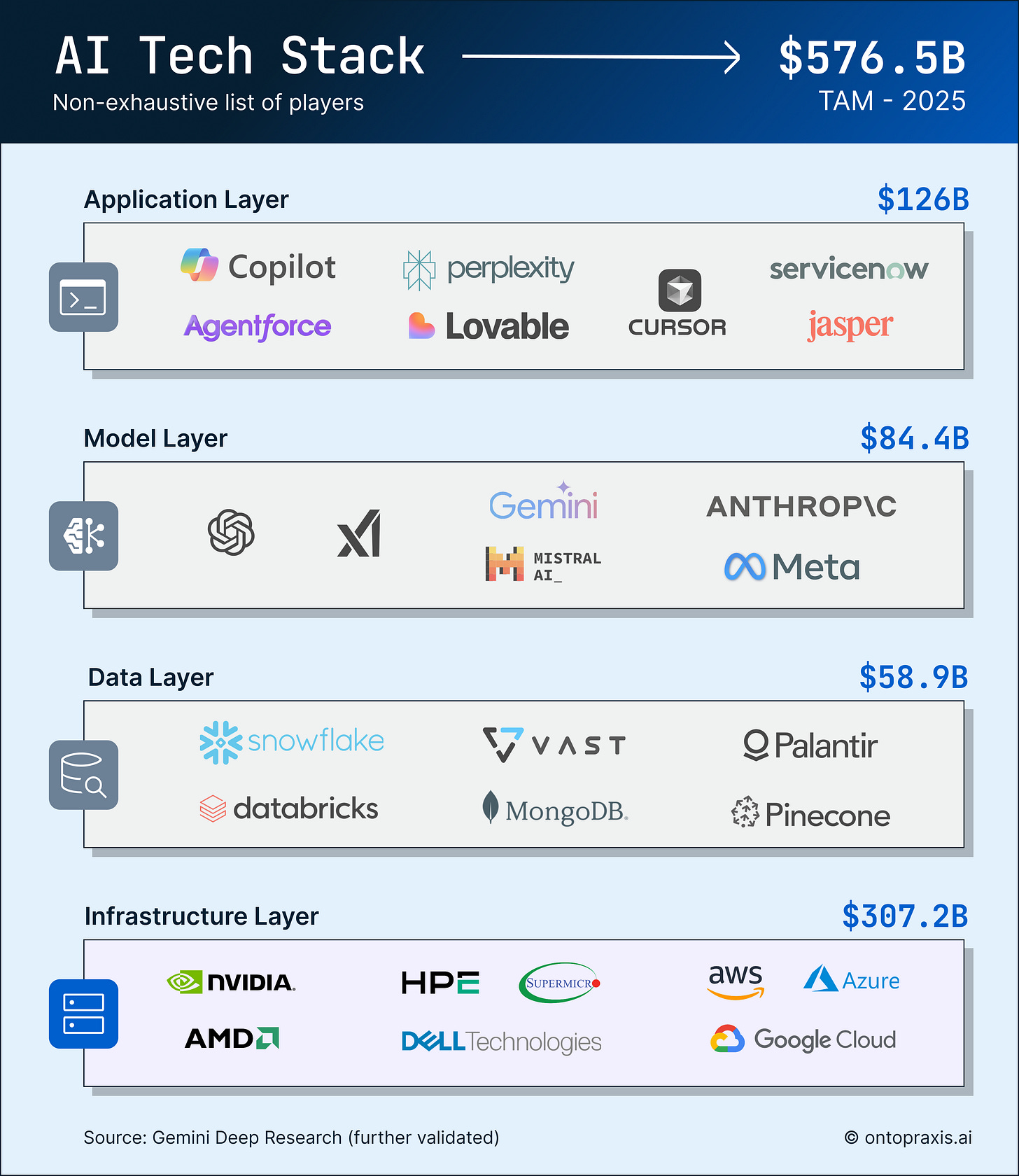

Disclaimer: The Total Addressable Market (TAM) figures presented herein are high-level estimates intended to illustrate the potential scope of the market. They are based on specific assumptions and publicly available data, which are subject to change. This information should not be construed as a factual representation or a guarantee of future results. We strongly advise conducting independent due diligence before making any business decisions based on this data.

Let’s start with the obvious. The AI stack is crowded and rapidly expanding. By the end of 2025, it’s projected to hit a $576.5B total addressable market across four major layers:

Applications: the visible layer where AI shows up—Copilot, Jasper, customer agents, and all the rest.

Models: the brains that power those applications—GPT, Gemini, Claude, Mistral.

Data: the fuel models need—platforms like Snowflake, Databricks, MongoDB.

Infrastructure: the foundation—servers, chips, networks, orchestration, and all the plumbing that holds the whole thing up.

Together, these layers form a massive market. Yet the lion’s share of value, an estimated $307B, still flows into infrastructure: the layer that gets the least attention but carries the most weight.
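A quick back-of-the-envelope check shows what "lion’s share" means in numbers. It uses only the article’s own TAM estimates quoted above (not independently verified); the variable names are illustrative.

```python
# TAM estimates quoted above, in billions of USD (projected, end of 2025).
total_tam = 576.5
infra_tam = 307.0

# Infrastructure's share of the whole stack, and what remains for the other three layers.
infra_share = infra_tam / total_tam
rest = total_tam - infra_tam

print(f"Infrastructure share: {infra_share:.1%}")     # Infrastructure share: 53.3%
print(f"All other layers combined: ${rest:.1f}B")     # All other layers combined: $269.5B
```

In other words, on these estimates infrastructure alone is worth more than applications, models, and data combined.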

What people think AI Infra is

If you say “AI infrastructure” in a room full of execs, you’ll get polite nods—and then they’ll tune out. Why? Because to many, it still means racks of servers, cloud contracts, or a CapEx problem for someone else to worry about. It’s seen as plumbing. Necessary, yes—but not strategic.

Also, much of the newfound excitement around infrastructure has been chip-driven—focused on accelerators and memory specs. But raw compute doesn’t equal real-world deployment.

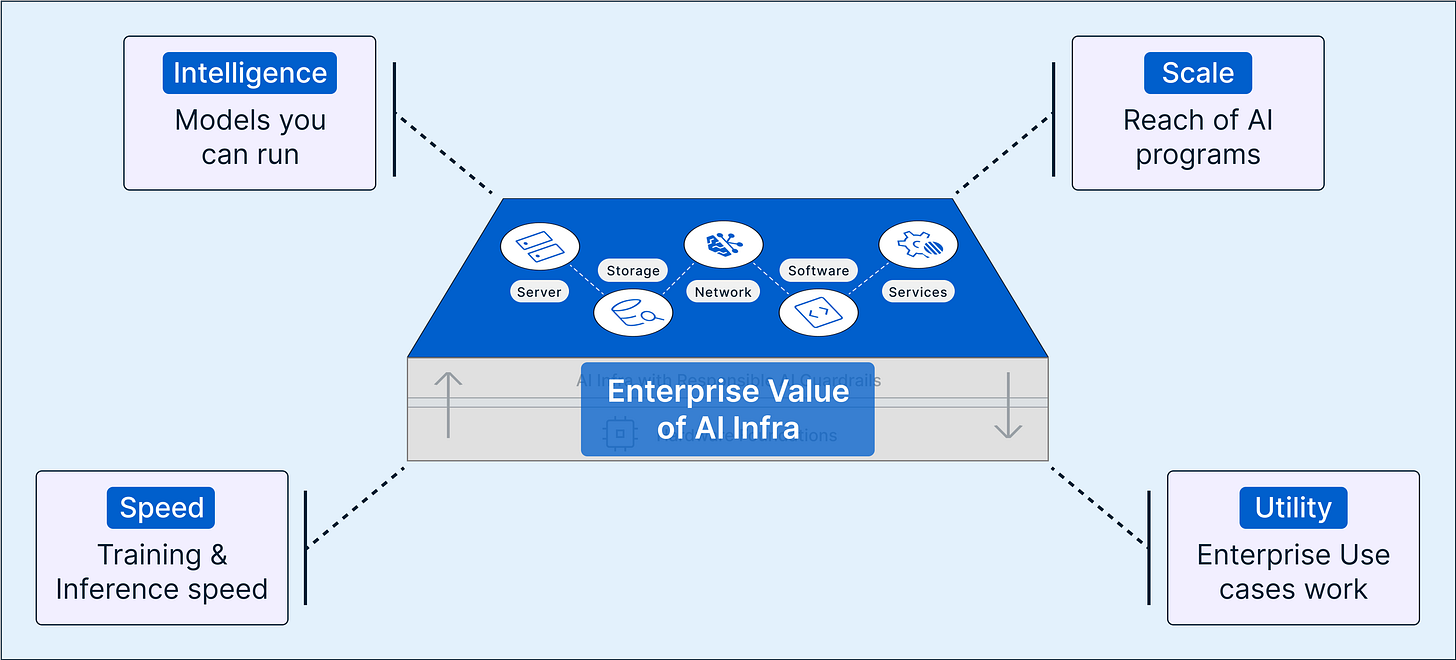

AI infrastructure is the platform layer. It’s what operationalizes those chips.

It determines:

Speed → how fast you can train and infer

Intelligence → what kind of models you can run

Utility → whether your enterprise use cases even work

Scale → how far and wide your AI programs can go

And most importantly, it defines where the value pools consolidate as adoption matures. Because chips don’t make AI deployable. Infrastructure does.

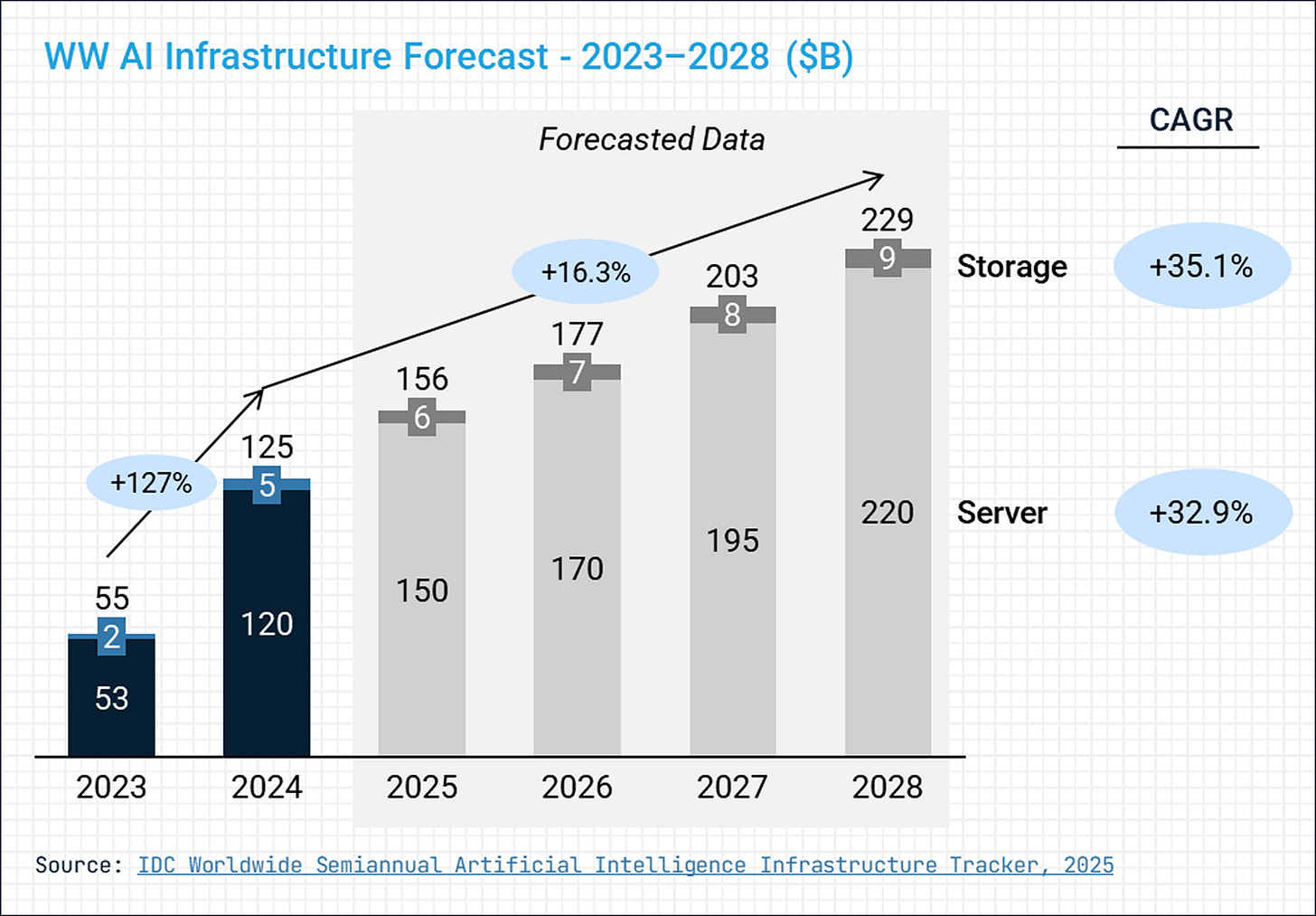

AI Infrastructure demand is surging

Enterprise adoption of AI is accelerating, but success hinges on the reliability, performance, and compliance of the infrastructure stack. [1][2]

Industry signals suggest three converging shifts that represent a significant market opportunity for infrastructure players:

Scale of demand: According to IDC, the AI-centric infrastructure market is expected to grow from $55B in 2023 to over $229B in 2028, driven by AI model training, inference, and edge deployments.

💡 From Dell Technologies, Q1 FY26 Earnings:

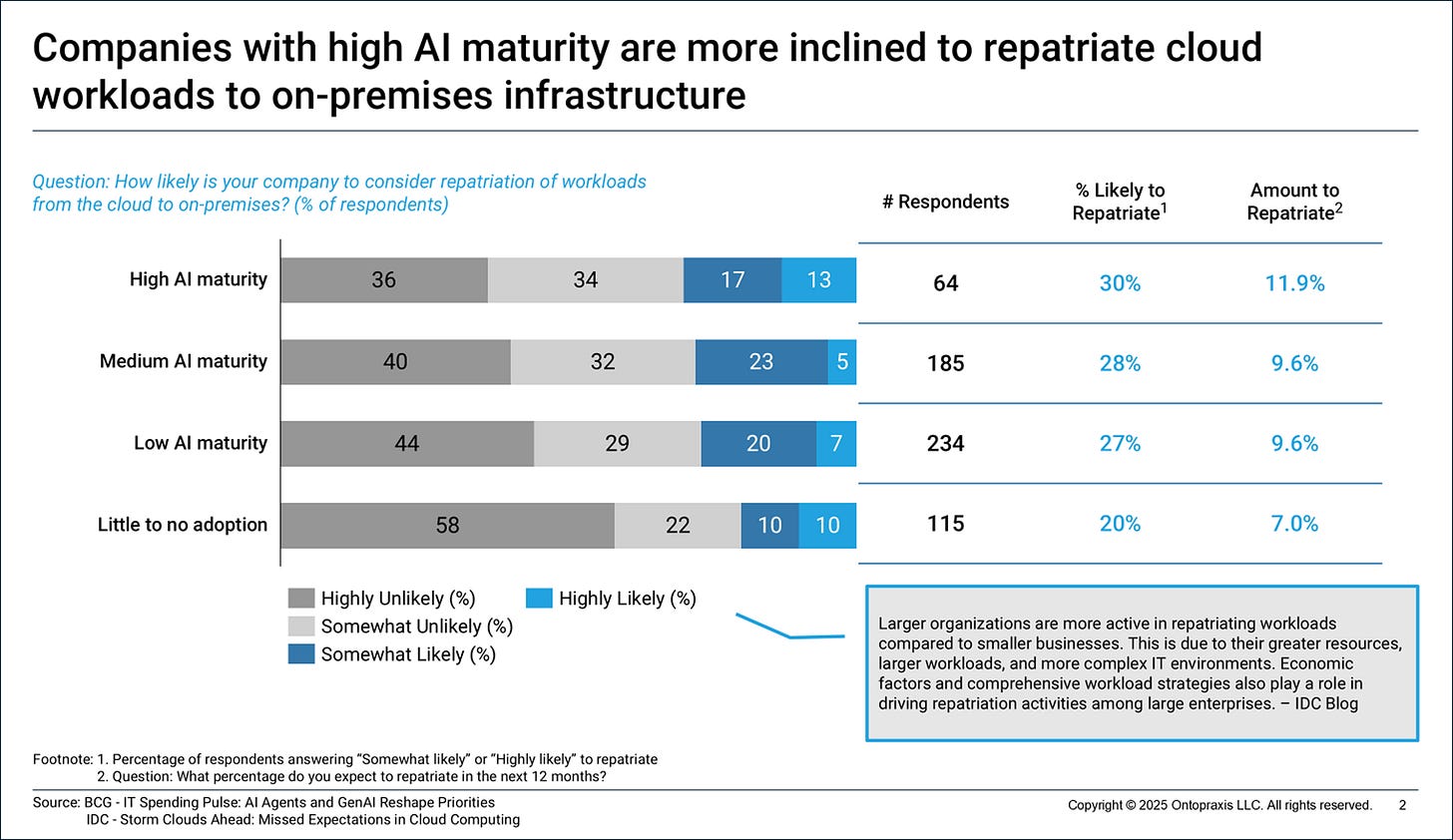

Architecture diversification: Enterprises are moving beyond cloud-first models. According to a BCG study, 30% of companies with high AI maturity are planning to repatriate workloads from the cloud to on-premises, citing cost, latency, and data sovereignty concerns.

Stack complexity: Infrastructure decisions now span compute, networking, storage, orchestration, model integration, and responsible AI. Decision-makers seek integrated platforms over point solutions.
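The IDC projection in the first shift above implies a steep compound annual growth rate (CAGR). A minimal sketch of the arithmetic, assuming the standard CAGR formula and treating "over $229B" as exactly $229B (so the result is a lower bound):

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values that are `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1

# IDC figures quoted above, in billions of USD; 2023 -> 2028 spans 5 years.
rate = cagr(55.0, 229.0, 2028 - 2023)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 33.0%
```

Roughly a third more spend every year, compounded for five years, is what turns a $55B market into a $229B one.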

These dynamics create an opportunity for infrastructure providers, especially those offering secure, sovereign, and scalable platforms tailored to AI use cases.

Rethink your GTM strategy and positioning

What does this mean for AI infrastructure vendors, especially those building servers, networking, storage, cloud, or hybrid environments?

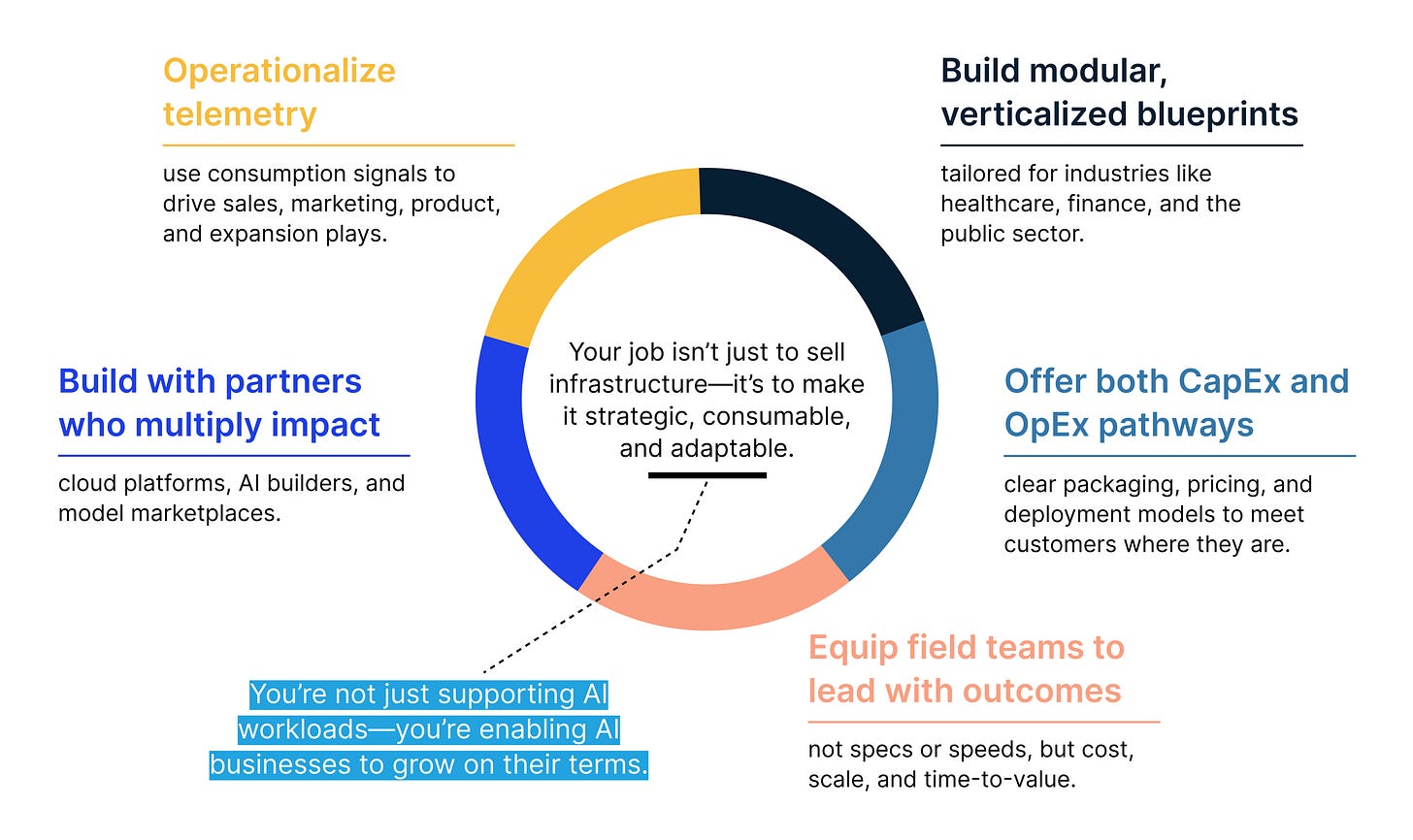

It means letting go of "feeds and speeds" and embracing a new identity as strategic enablers of AI value.

Winning in this market isn’t just about shipping hardware. It’s about delivering outcomes. That’s why vendors need to evolve into growth enablers, powering not just AI workloads but the business transformations those workloads unlock.

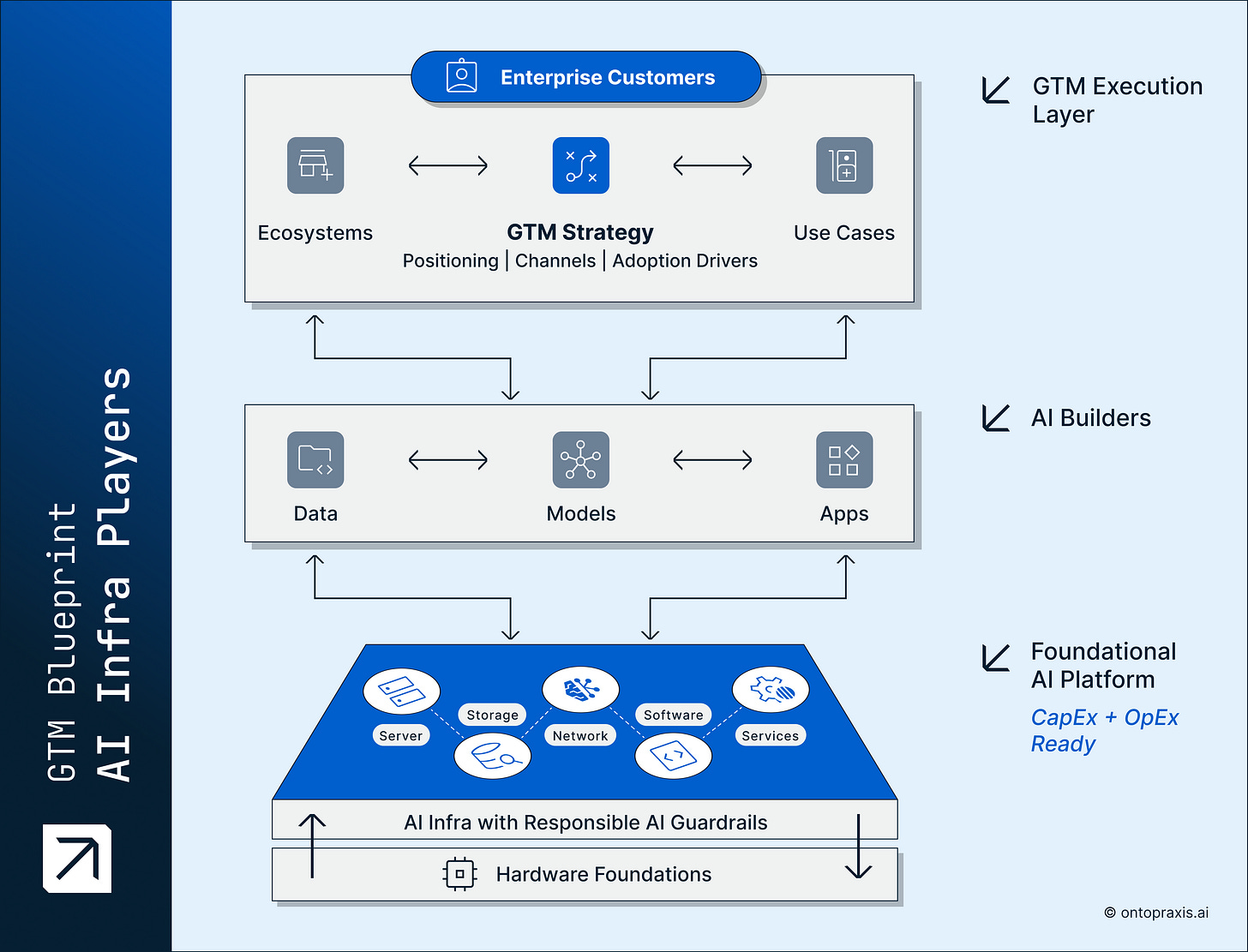

To get there, we propose a GTM model built around flexible deployment and usage-aligned growth—across CapEx and OpEx paths.

Foundational infrastructure layer

This is the engine room—the operational heart of AI. And it’s no longer just about raw compute or static capacity. Today’s AI infrastructure must be both provisioned and programmable, supporting enterprises that want to own their deployment (CapEx) and those that want to scale dynamically (OpEx).

That’s the opportunity: infrastructure becomes not just a platform, but a pricing and deployment strategy. By offering both traditional CapEx models and modern, usage-based options—backed by real-time telemetry and elastic controls—you meet customers where they are and grow with them as needs evolve.

CapEx gives control. OpEx gives agility. Your platform offers both.

Key tenets of this layer:

Go beyond hardware: integrate orchestration, AI-ready services, and hybrid deployment support

Offer deployment optionality: on-prem CapEx, hybrid, or cloud OpEx

Embed usage-awareness even in CapEx deployments (e.g., for visibility and optimization)

Wrap it all in Responsible AI guardrails: security, compliance, and auditability

Build trust by aligning spend with value—whether paid upfront or scaled over time

AI Builder layer

This is where innovation happens—from first fine-tune to full-scale deployment. Builders today want freedom: to build quickly, scale predictably, and avoid vendor lock-in. Whether they’re operating on a cloud budget or building on dedicated infrastructure, your GTM needs to empower both modes.

With OpEx, that means usage dashboards, credit-based onboarding, and real-time spend tracking. With CapEx, it means performance tuning tools, optimized configs, and APIs that make private deployments feel cloud-native.

Key tenets of this layer:

Equip builders with SDKs, APIs, and model deployment frameworks

Support usage-aware workflows even in fixed-cost environments

Let teams optimize performance and cost across both billing models

Remove friction: builders should be focused on innovation, not infrastructure procurement

GTM execution layer

This is where you align infrastructure with real business outcomes. And just like your customers’ workloads, your GTM model should scale across commercial contexts—whether that's a CFO signing a multi-year infrastructure investment or a business unit testing use cases on variable pricing.

Your GTM motion should speak to both finance and dev—because buying happens across both. In one motion, you might land a CapEx-heavy deal with a sovereign AI deployment. In another, you may guide a FinOps team toward usage-based scale tied to enterprise OKRs.

Key tenets of this layer:

Position your GTM around customer choice: pay up front, or grow as you go

Use telemetry and workload insights to expand intelligently—across both models

Co-sell with partners, but retain visibility into usage and outcomes

Track and measure value through adoption, not just bookings

CapEx or OpEx – Consumption powers both

Too often, “consumption” is framed as just an OpEx or cloud play. But in this GTM model, consumption is a unifier—a feedback mechanism that connects all layers and pricing models.

CapEx customers still benefit from usage visibility, optimization, and workload observability

OpEx customers scale naturally, with pricing that matches impact

GTM teams use the same telemetry to drive upsell, adoption, and retention—regardless of the commercial model
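To make the "same telemetry, either commercial model" idea concrete, here is a minimal sketch in which one stream of usage events feeds a CapEx utilization view, an OpEx invoice, and a per-workload breakdown a GTM team could use in expansion conversations. Everything in it is hypothetical: the `UsageEvent` record, GPU-hours as the usage unit, and the capacity and price values are illustrative assumptions, not any vendor’s actual telemetry schema or pricing.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UsageEvent:
    """Hypothetical telemetry record: GPU-hours consumed by one workload."""
    workload: str
    gpu_hours: float


def capex_utilization(events: list[UsageEvent], owned_gpus: int, hours_in_period: float) -> float:
    """CapEx view: fraction of owned GPU capacity actually used in the period."""
    used = sum(e.gpu_hours for e in events)
    return used / (owned_gpus * hours_in_period)


def opex_invoice(events: list[UsageEvent], price_per_gpu_hour: float) -> float:
    """OpEx view: bill the same consumption at a (hypothetical) usage-based rate."""
    return sum(e.gpu_hours for e in events) * price_per_gpu_hour


def usage_by_workload(events: list[UsageEvent]) -> dict[str, float]:
    """GTM view: total consumption per workload, e.g. to spot expansion candidates."""
    totals: defaultdict[str, float] = defaultdict(float)
    for e in events:
        totals[e.workload] += e.gpu_hours
    return dict(totals)


# Illustrative month of telemetry (all numbers are made up).
events = [UsageEvent("fine-tune", 300.0), UsageEvent("inference", 420.0)]

print(f"{capex_utilization(events, owned_gpus=8, hours_in_period=720):.1%}")  # 12.5%
print(f"${opex_invoice(events, price_per_gpu_hour=2.5):,.2f}")               # $1,800.00
print(usage_by_workload(events))  # {'fine-tune': 300.0, 'inference': 420.0}
```

The design point is that none of the three views needs its own data pipeline; only the interpretation of the same events changes with the commercial model.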

It’s not either/or. It’s the power of And.

CapEx control. OpEx agility. Your infra stack delivers both—with intelligence built in.

If you lead GTM at an AI Infrastructure vendor

The opportunity ahead

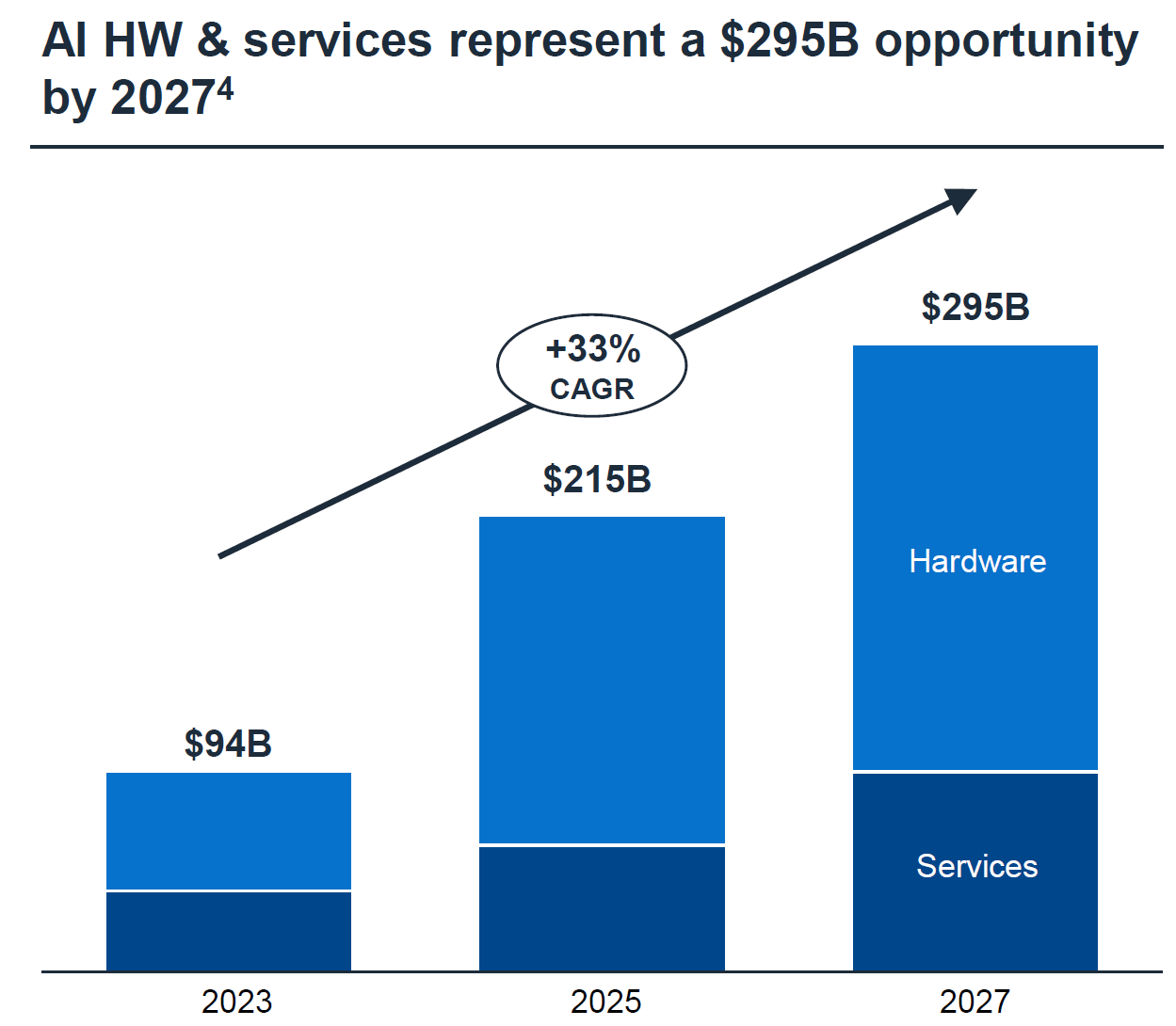

This moment isn’t about faster chips or bigger clusters. It’s about repositioning infrastructure—from a cost center to a visible lever for AI delivery and enterprise value.

“Data centers are experiencing a surge driven by GenAI, with spending on AI optimized servers, which was virtually nonexistent in 2021, expected to triple that of traditional servers by 2027”

John Lovelock, Distinguished VP Analyst, Gartner

Smart infrastructure vendors don’t need to outscale the hyperscalers.

Instead, they’ll differentiate where enterprise buyers feel the most pain: deployment flexibility, cost predictability, data governance, and usage-aligned growth.

Because the next phase of AI isn’t powered by hype. It’s powered by usage.

And usage starts with infrastructure that flexes with the workload, the buyer, and the business.

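References

[1] McKinsey & Company, "What is a data center?": https://www.mckinsey.com/featured-insights/mckinsey-explainers/what-is-a-data-center

[2] Gartner, "Gartner Forecasts Worldwide IT Spending to Grow 7.9% in 2025" (July 15, 2025): https://www.gartner.com/en/newsroom/press-releases/2025-07-15-gartner-forecasts-worldwide-it-spending-to-grow-7-point-9-percent-in-2025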